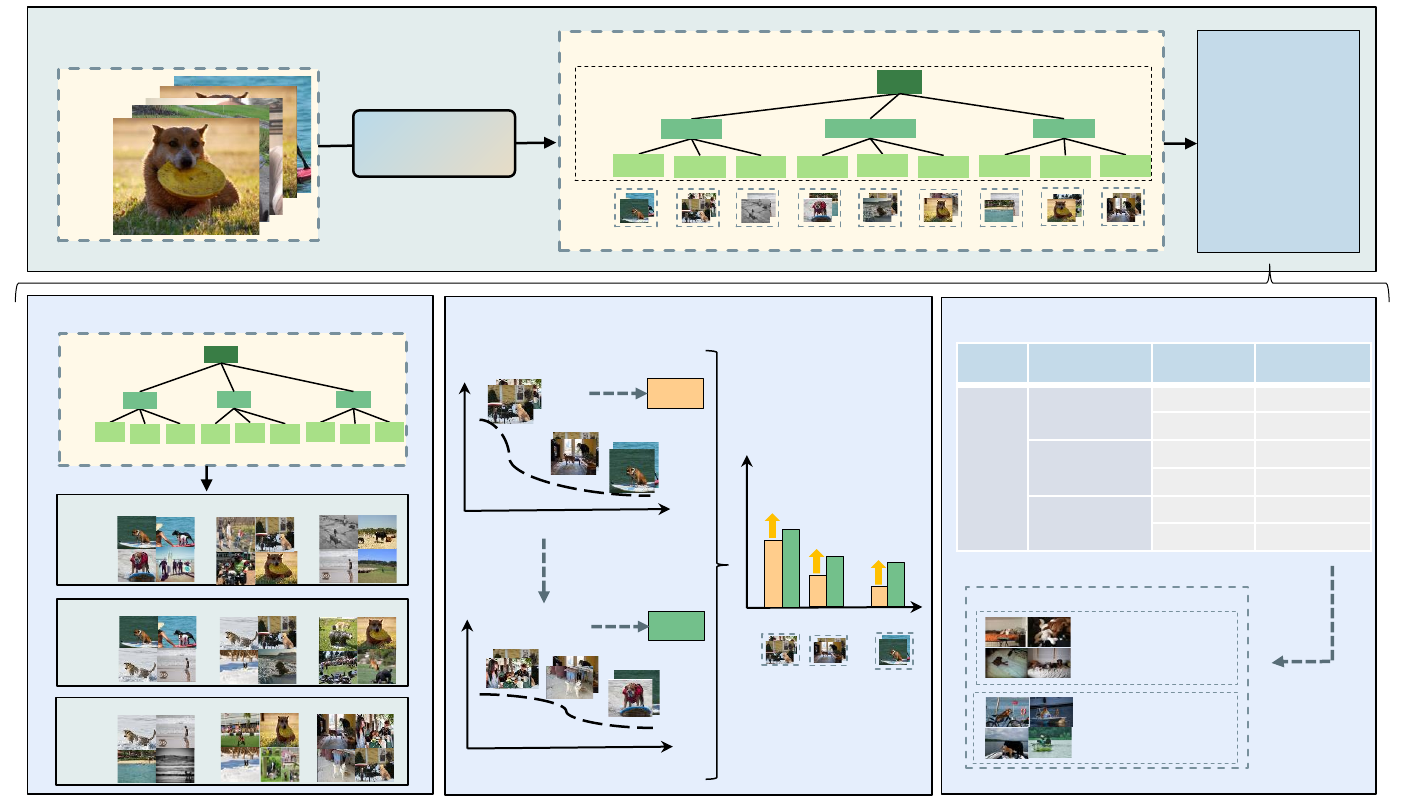

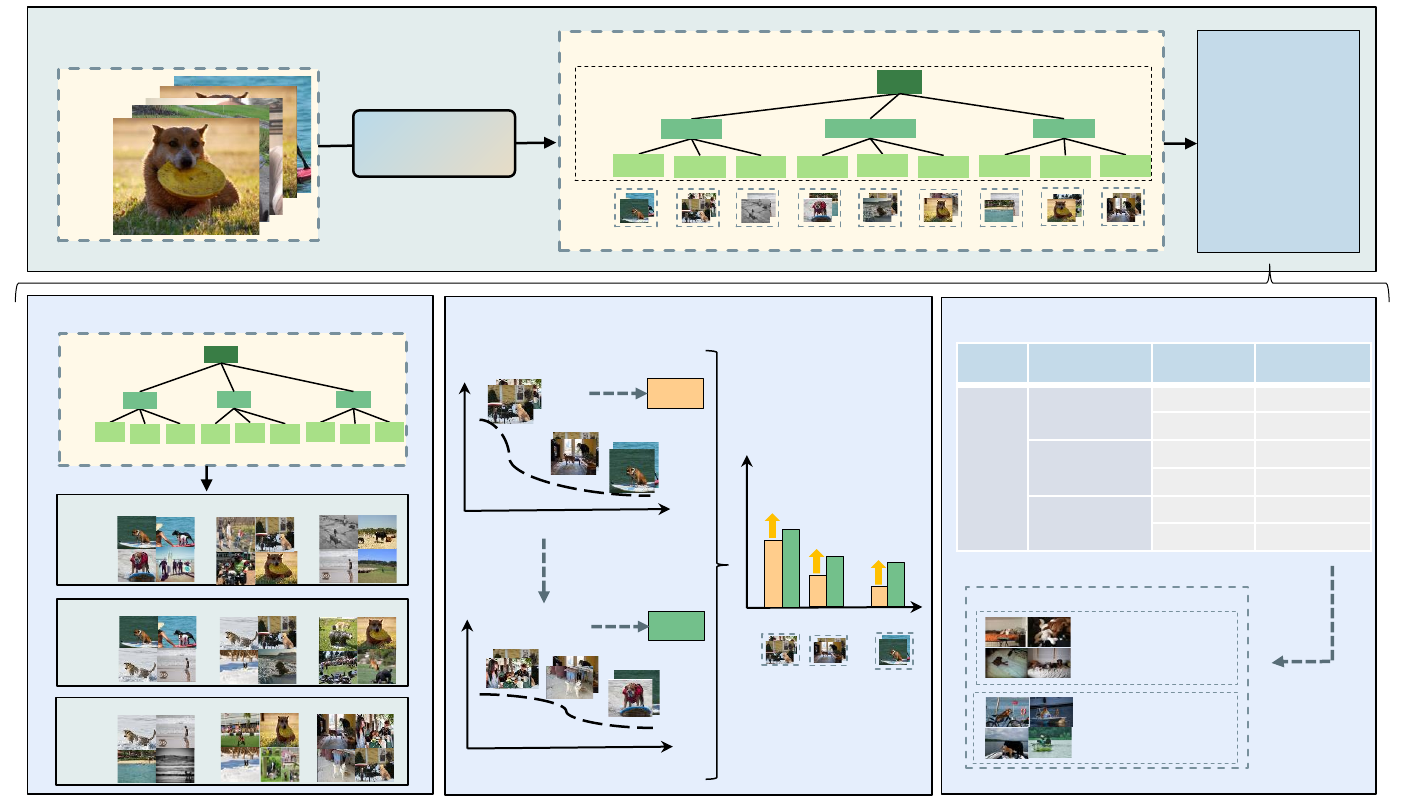

Fig 1: (A) The Workflow of Subpopulation Structure Discovery with Large Language Models (SSD-LLM). SSD-LLM can further support several downstream tasks including: (B) Dataset Subpopulation Organization; (C) Subpopulation Shift; (D) Slice discovery


🔥 Large language models can serve as dataset analysts. Their extensive world knowledge and summarization capabilities let us extract valuable insights from massive amounts of information, which can benefit model training.

🔥 We propose the concept of subpopulation structure to represent, analyze, and utilize subpopulation distributions within datasets. This concept is crucial for solving many subpopulation-related tasks, including dataset subpopulation organization, subpopulation shift, and slice discovery.

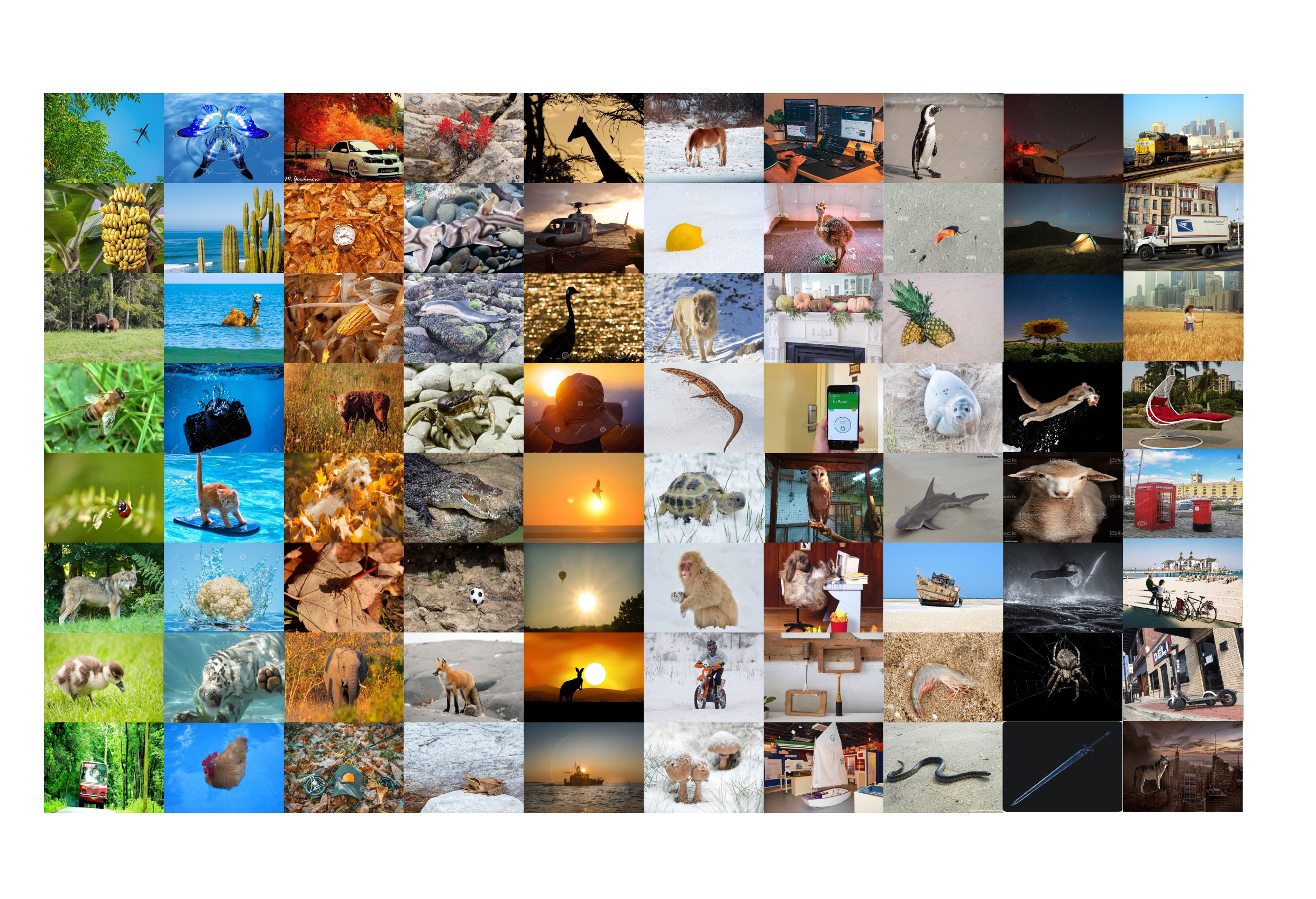

The distribution of subpopulations is an important property hidden within a dataset. Uncovering and analyzing the subpopulation distribution within datasets provides a comprehensive understanding of the datasets, standing as a powerful tool beneficial to various downstream tasks, including Dataset Subpopulation Organization, Subpopulation Shift, and Slice Discovery. Despite its importance, there has been no work that systematically explores the subpopulation distribution of datasets to our knowledge.

To address the limitation and solve all the mentioned tasks in a unified way, we introduce a novel concept of subpopulation structures to represent, analyze, and utilize subpopulation distributions within datasets. To characterize the structures in an interpretable manner, we propose the Subpopulation Structure Discovery with Large Language Models (SSD-LLM) framework, which employs world knowledge and instruction-following capabilities of Large Language Models (LLMs) to linguistically analyze informative image captions and summarize the structures. Furthermore, we propose complete workflows to address downstream tasks, named Task-specific Tuning, showcasing the application of the discovered structure to a spectrum of subpopulation-related tasks, including dataset subpopulation organization, subpopulation shift, and slice discovery.

With the help of SSD-LLM, we can automatically structure datasets at the subpopulation level, achieve an average gain of +3.3% in worst-group accuracy over previous methods on the subpopulation shift benchmarks Waterbirds, Metashift, and Nico++, and identify more consistent slice topics with a 3.95% higher model error rate on the slice discovery task for ImageNet.

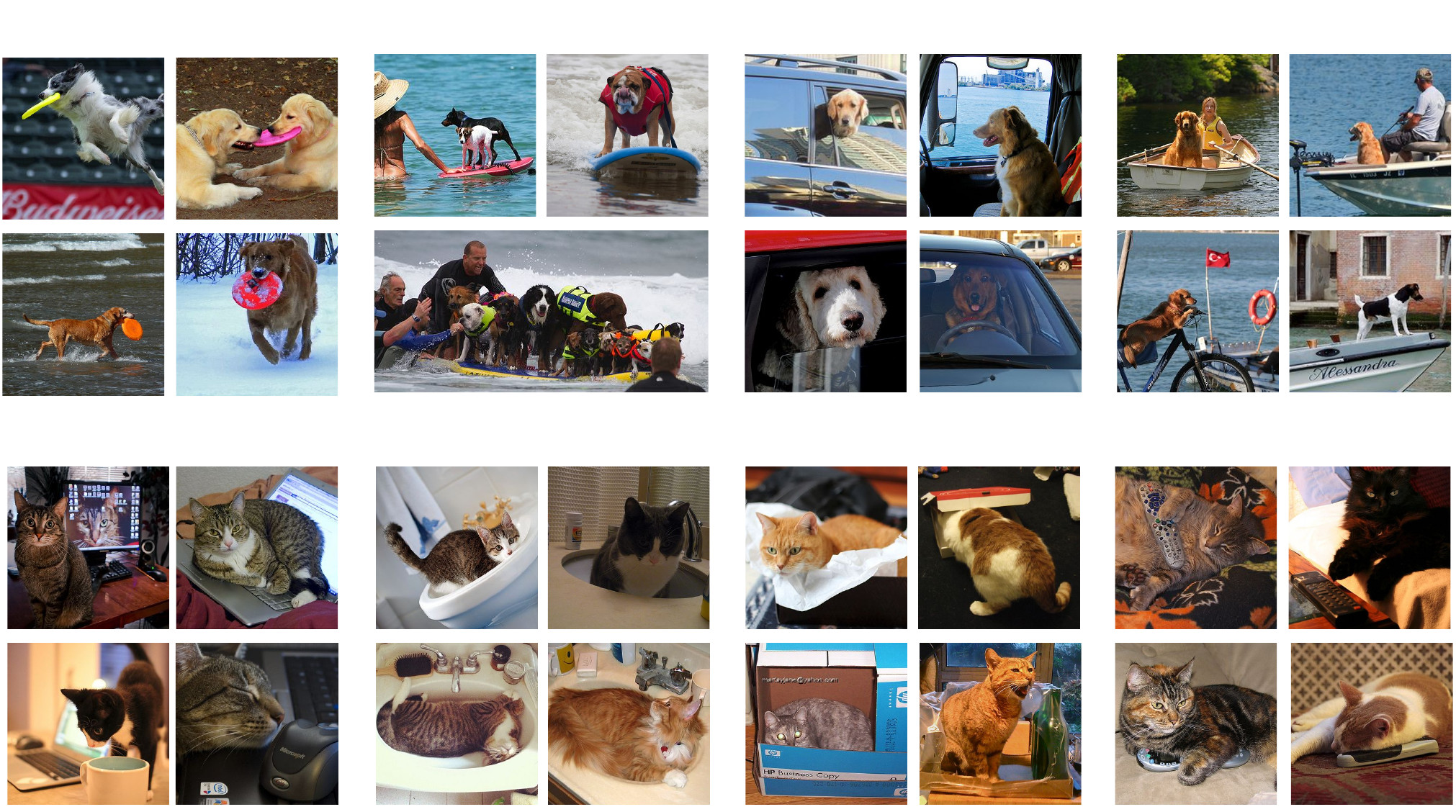

A subpopulation is defined as a set of data points that share common characteristics [2]. For example, within the class "Cat", black cats and white cats can be seen as different subpopulations.

Subpopulations matter because many tasks are subpopulation-related. For example:

Image clustering conditioned on text criteria [1] partitions an image dataset into different subpopulations based on user-specified criteria.

The study of subpopulation shift [2] aims to mitigate the negative impact that imbalanced subpopulation distributions in the training set have on the model.

Fig 3. Different types of subpopulation shift [2]. Subpopulation shift occurs when the proportions of some subpopulations change between training and deployment, and it has been shown to significantly affect model performance.

Slice discovery [5, 7] aims to identify subpopulations on which a model underperforms.

Fig 4. An overview of the typical process for slice discovery, which aims to identify subpopulations where the model underperforms [7].

Summarizing the commonalities of these tasks, we find that analyzing the subpopulation distribution is the key to solving all of them. If the subpopulation distribution can be characterized, image clustering results under different criteria are naturally obtained [1], additional images can be added to rare subgroups to balance the whole dataset [8], and slices can be discovered by computing per-subpopulation error rates on the validation set [9].

Despite its importance, existing work lacks systematic exploration of subpopulation distribution.

1. We introduce the concept of subpopulation structure to characterize subpopulation distribution in an interpretable manner for the first time.

2. We propose a class-dimension-attribute-subpopulation structure, reducing the attribute confusion of the existing class-attribute-subpopulation structure.

3. We propose the Subpopulation Structure Discovery with Large Language Model (SSD-LLM) framework to automatically uncover the underlying subpopulation structure of datasets, with two carefully designed prompt engineering components: Criteria Initialization and Criteria Refinement.

4. We provide methods for Task-specific Tuning, enabling the application of the structures across a spectrum of subpopulation-related tasks, including dataset subpopulation organization, subpopulation shift, and slice discovery.

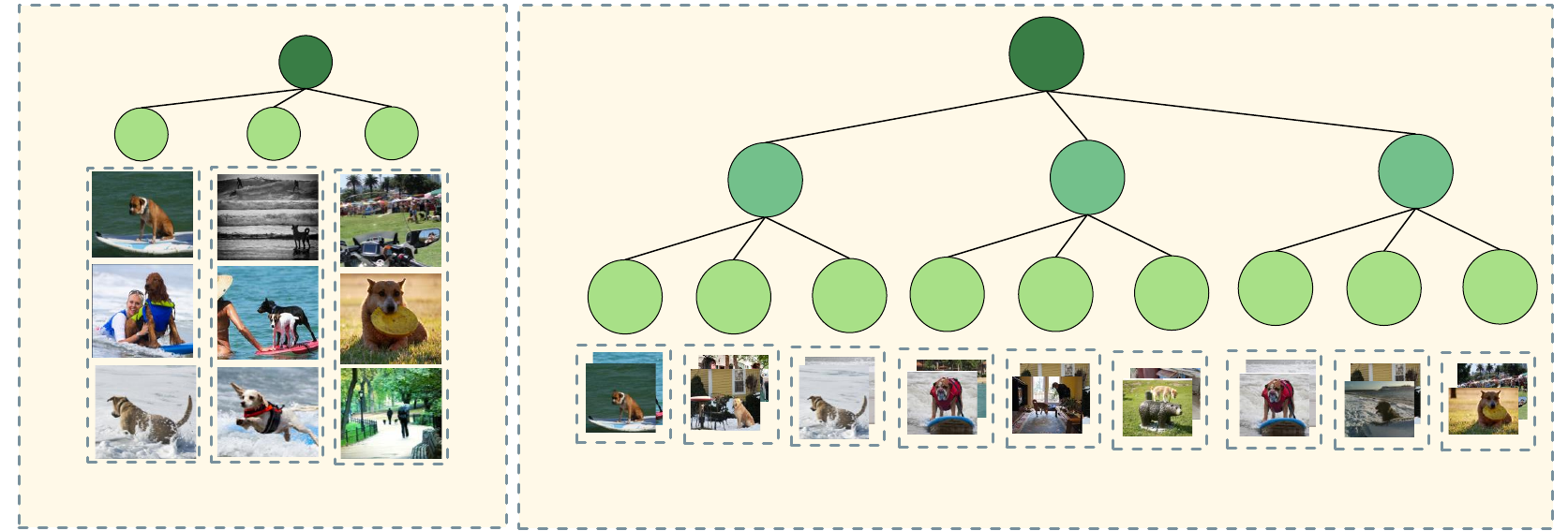

Subpopulation structure is a set of hierarchical relations among several subpopulations determined by certain criteria. Subpopulation structure can characterize subpopulation distribution in an interpretable manner.

Earlier works such as Metashift [3] and NICO++ [4] construct image datasets that include subpopulation information by organizing images with respect to extra attributes, which can be viewed as a class-, attribute-, subpopulation-layer structure. The problem with such a structure is that it ignores the category of each attribute (its dimension), leading to attribute inconsistency and confusion.

To solve this issue, we introduce a class-, dimension-, attribute-, and subpopulation-layer structure. By articulating the classification dimensions, this improved structure provides more nuanced attribute assignments.

Fig 7. Metashift places the attributes Surfboard, Water, and Grass at the same level for the class Dog, which is problematic because they can overlap. As an improvement, we take dimensions into consideration: the class Dog has dimensions including Action, Co-occurrence Object, Location, etc., and the dimension Location contains attributes such as Water and Grass, which offers a more appropriate assignment for the samples.
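To make the four-layer idea concrete, the following is a minimal sketch of a class → dimension → attribute → subpopulation structure as a nested dictionary. The image ids and the helper names `subpopulations` and `attributes_of` are hypothetical and chosen only for illustration; this is not the authors' data format.

```python
# Hypothetical four-layer structure: class -> dimension -> attribute -> image ids.
# The image ids are made up for illustration.
STRUCTURE = {
    "Dog": {
        "Location": {"Water": ["img_001"], "Grass": ["img_002", "img_003"]},
        "Co-occurrence Object": {"Surfboard": ["img_001"]},
    }
}


def subpopulations(structure):
    """Yield (class, dimension, attribute, image_ids) for every leaf of the structure."""
    for cls, dimensions in structure.items():
        for dimension, attributes in dimensions.items():
            for attribute, image_ids in attributes.items():
                yield cls, dimension, attribute, image_ids


def attributes_of(structure, cls, image_id):
    """Return {dimension: attribute} for one image of a class."""
    found = {}
    for dimension, attributes in structure[cls].items():
        for attribute, image_ids in attributes.items():
            if image_id in image_ids:
                found[dimension] = attribute
    return found


# A dog on a surfboard in the water gets one attribute per dimension,
# so Surfboard and Water no longer compete at the same level.
print(attributes_of(STRUCTURE, "Dog", "img_001"))
# {'Location': 'Water', 'Co-occurrence Object': 'Surfboard'}
```

Because attributes are grouped under a dimension, an image can carry Water and Surfboard at the same time without the two being treated as mutually exclusive labels.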

Key Information Extraction: The approach must be capable of extracting key information from images and summarizing essential content from extensive texts.

World Knowledge: The approach requires comprehensive world knowledge, enabling a broad understanding of various aspects of the datasets, including diverse categories, common attributes, and the relationships between dimensions and attributes.

Our solution: MLLM Caption + LLM Summary!

Fig 8: Subpopulation Structure Discovery with Large Language Model (SSD-LLM). (Step 1) Multimodality Large Language Model (MLLM) extracts informative captions from images. (Step 2) LLM initializes the criteria with a sample-based generate-and-select paradigm. (Step 3) LLM refines the criteria using self-consistency as an indicator. (Step 4) LLM assigns each caption with specific attributes according to the refined criteria, uncovering the intrinsic subpopulation structures hidden in the dataset. The resulting criteria and subpopulations are used in several downstream tasks.
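One possible reading of "self-consistency as an indicator" in Step 3 is sketched below: query an attribute assigner several times for the same caption and treat low agreement as a signal that the criteria need refinement. This is an assumption made for illustration, not the authors' exact procedure. The function names, the threshold of 0.8, and the toy assigner (which stands in for a real LLM call) are all hypothetical.

```python
import itertools
from collections import Counter


def self_consistency(assign, caption, n_votes=5):
    """Call the assigner n_votes times; return (majority label, agreement ratio)."""
    votes = Counter(assign(caption) for _ in range(n_votes))
    label, count = votes.most_common(1)[0]
    return label, count / n_votes


def captions_needing_refinement(assign, captions, threshold=0.8, n_votes=5):
    """Captions whose assignments disagree too often under the current criteria."""
    return [c for c in captions if self_consistency(assign, c, n_votes)[1] < threshold]


def make_toy_assigner():
    """Stand-in for an LLM call: stable on 'grass', alternating otherwise."""
    flip = itertools.cycle(["Water", "Beach"])

    def assign(caption):
        return "Grass" if "grass" in caption else next(flip)

    return assign


captions = ["a dog running on grass", "a dog near the shore"]
print(captions_needing_refinement(make_toy_assigner(), captions))
# ['a dog near the shore']
```

In this toy run the second caption is flagged because the attributes Water and Beach overlap, which is the kind of ambiguity a refinement step would be expected to resolve.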

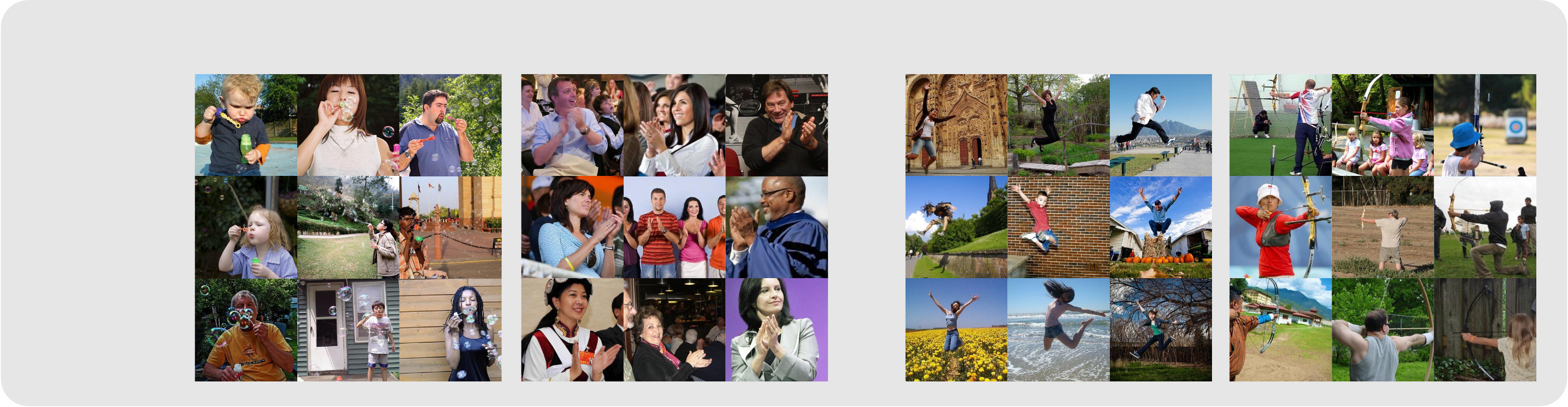

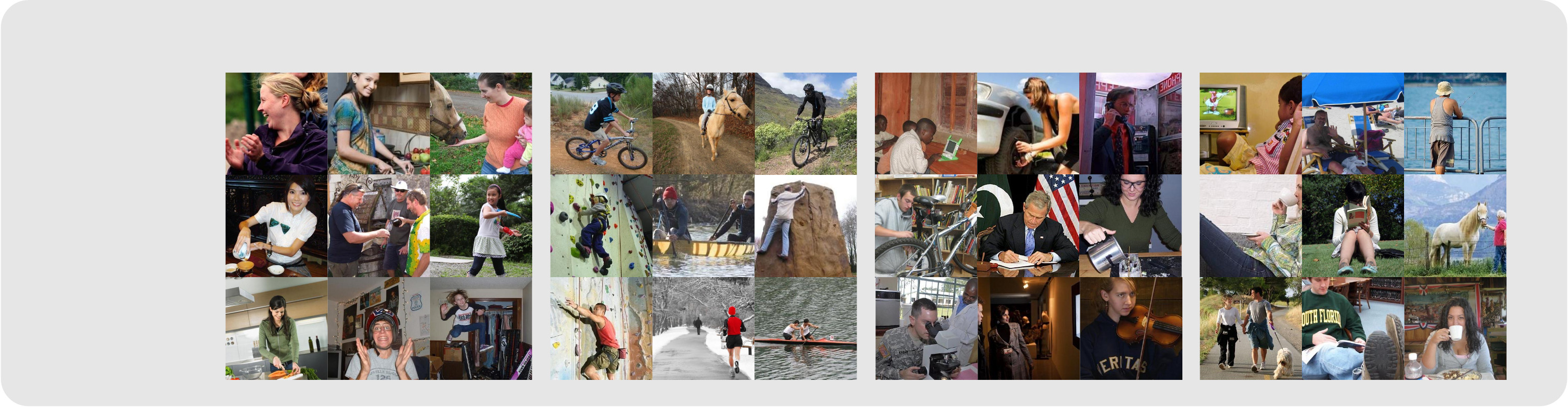

The discovered subpopulation structure can be used to organize datasets, and the organization quality is evaluated using the task of image clustering conditioned on human-specified criteria (ICTC). Specifically, when organizing the subpopulations of a given image dataset, we first select the relevant dimensions and then attach the attributes assigned by SSD-LLM directly to the corresponding images.
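The organization step can be sketched as a simple grouping, assuming each image already carries a `{dimension: attribute}` mapping produced by the assignment step. The `assignments` data and the function name `organize_by_dimension` are hypothetical.

```python
from collections import defaultdict


def organize_by_dimension(assignments, dimension):
    """Group image ids by the attribute they received in the chosen dimension."""
    clusters = defaultdict(list)
    for image_id, attributes in assignments.items():
        if dimension in attributes:  # skip images with no attribute in this dimension
            clusters[attributes[dimension]].append(image_id)
    return dict(clusters)


# Hypothetical per-image assignments.
assignments = {
    "img_001": {"Action": "riding a bike", "Location": "street"},
    "img_002": {"Action": "cooking", "Location": "kitchen"},
    "img_003": {"Action": "riding a bike", "Location": "park"},
}

print(organize_by_dimension(assignments, "Action"))
# {'riding a bike': ['img_001', 'img_003'], 'cooking': ['img_002']}
```

Choosing a different dimension, such as Location, regroups the same images under a different criterion without re-running the assignment.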

SSD-LLM, combined with image generation, offers a way to better handle subpopulation shift. After applying SSD-LLM to a dataset, we count the images in each subpopulation and use a diffusion model to generate images for underrepresented subpopulations. Specifically, we first sample attributes from the subpopulation structure for each underrepresented subpopulation and then employ an LLM to compose complete sentences from these words, which serve as input prompts for the diffusion model. The generated images are added to the dataset, which helps balance classes and attributes. Moreover, for this task we use an LLM to suggest extra dimensions and attributes based on the current sets, enriching the subpopulation structure and yielding more diverse generated images.

Fig 10. Subpopulation Shift. We balance classes and attributes by generating images for underrepresented subpopulations using a diffusion model, which enhances the model's performance on long-tail distributions.
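The counting step behind this balancing can be sketched as follows. The sketch assumes each training image is labelled with a `(class, attribute)` pair and that every subpopulation is topped up to the size of the largest one; both choices are assumptions. The fixed prompt template is only a placeholder for the LLM-written sentence, and the diffusion call itself is omitted.

```python
from collections import Counter


def generation_plan(labels, target=None):
    """Return {subpopulation: number of images to generate} for underrepresented ones.

    labels: one (class, attribute) pair per training image.
    target: desired images per subpopulation; defaults to the largest observed count.
    """
    counts = Counter(labels)
    if target is None:
        target = max(counts.values())
    return {sp: target - n for sp, n in counts.items() if n < target}


def make_prompt(cls, attribute):
    """Placeholder for the LLM step that turns sampled attributes into a sentence."""
    return f"a photo of a {cls.lower()} with background: {attribute.lower()}"


# Hypothetical label counts for a Waterbirds-style dataset.
labels = (
    [("Waterbird", "Water")] * 4
    + [("Waterbird", "Land")] * 1
    + [("Landbird", "Land")] * 4
    + [("Landbird", "Water")] * 2
)

for (cls, attribute), n_images in generation_plan(labels).items():
    print(n_images, make_prompt(cls, attribute))
```

Note that a subpopulation with zero training images never appears in `counts`, so covering such cases would require enumerating the structure itself rather than the observed labels.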

SSD-LLM conducts slice discovery for an image dataset with the help of the assigned attributes. In detail, we first calculate the error rate on every subpopulation discovered by SSD-LLM. We then identify the subpopulations with the highest error rates and use the LLM to summarize their attributes into textual descriptions that represent the slice topics.

Fig 11. Slice Discovery. Subpopulations in the validation set with higher error rates are identified as the recommended slice topics.
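The error-rate ranking can be sketched as below, assuming validation predictions are available as `(subpopulation, is_correct)` records. The `min_size` filter and the function name `top_error_slices` are hypothetical additions, and the final LLM summarization of slice topics is not shown.

```python
from collections import defaultdict


def top_error_slices(records, k=3, min_size=1):
    """Rank subpopulations by validation error rate and return the top k.

    records: iterable of (subpopulation, is_correct) pairs.
    min_size: ignore subpopulations with fewer validation samples than this.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for subpopulation, is_correct in records:
        totals[subpopulation] += 1
        if not is_correct:
            errors[subpopulation] += 1
    rates = {
        sp: errors[sp] / totals[sp] for sp in totals if totals[sp] >= min_size
    }
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)[:k]


# Hypothetical validation records.
records = (
    [(("Boat", "at night"), False)] * 3
    + [(("Boat", "at night"), True)] * 1
    + [(("Boat", "on a lake"), True)] * 3
    + [(("Boat", "on a lake"), False)] * 1
)

print(top_error_slices(records, k=1))
# [(('Boat', 'at night'), 0.75)]
```

A minimum slice size is worth considering in practice, since a subpopulation with only one or two validation images can show an extreme error rate by chance.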

| Dataset | Criterion | SCAN* | IC\|TC | Ours |
|---|---|---|---|---|
| Stanford 40 Action | Action | 0.346 | 0.747 | 0.817 |
| | Location | 0.357 | 0.671 | 0.705 |
| | Mood | 0.276 | 0.746 | 0.768 |
| Place365 | Place | 0.332 | - | 0.696 |
| PPMI | Musical Instrument | 0.598 | 0.934 | 0.955 |
| Cifar10 | Object | 0.839 | 0.911 | 0.921 |
| STL10 | Object | 0.798 | 0.986 | 0.988 |

| Type | Method | Avg. Acc. (Waterbirds) | Avg. Acc. (Metashift) | Avg. Acc. (Nico++) | Avg. Acc. (Average) | Worst-Group Acc. (Waterbirds) | Worst-Group Acc. (Metashift) | Worst-Group Acc. (Nico++) | Worst-Group Acc. (Average) |
|---|---|---|---|---|---|---|---|---|---|
| Vanilla | ERM | 84.1 | 91.2 | 76.3 | 83.7 | 69.1 | 82.1 | 17.8 | 56.3 |
| Subgroup Robust Methods | GroupDRO | 86.9 | 91.5 | 74.0 | 84.1 | 73.1 | 83.1 | 12.2 | 56.1 |
| | JTT | 88.9 | 91.2 | 77.5 | 85.9 | 71.2 | 82.6 | 15.6 | 56.5 |
| | LfF | 86.6 | 80.4 | 77.5 | 81.5 | 75.0 | 72.3 | 15.6 | 54.3 |
| | LISA | 89.2 | 91.4 | 75.0 | 85.2 | 77.0 | 79.0 | 18.9 | 58.3 |
| Imbalanced Learning | Resample | 86.2 | 92.2 | 77.3 | 85.2 | 70.0 | 81.0 | 16.7 | 55.9 |
| | Reweight | 86.2 | 91.5 | 73.8 | 83.8 | 71.9 | 83.1 | 12.2 | 55.7 |
| | Focal | 89.3 | 91.6 | 73.1 | 84.7 | 71.6 | 81.0 | 16.7 | 56.4 |
| | CBLoss | 86.8 | 91.4 | 76.3 | 84.8 | 74.4 | 83.1 | 12.2 | 56.6 |
| | BSoftmax | 88.4 | 91.3 | 74.2 | 84.6 | 74.1 | 82.6 | 16.7 | 57.8 |
| Traditional Data Augmentation | Mixup | 89.2 | 91.4 | 73.0 | 84.5 | 77.5 | 79.0 | 14.4 | 57.0 |
| | RandAug | 86.3 | 90.9 | 72.0 | 83.1 | 71.4 | 80.9 | 16.7 | 56.3 |
| Diffusion | Class Prompt | 85.9 | 91.5 | 78.0 | 85.1 | 71.3 | 82.7 | 18.5 | 57.5 |
| | Class-Attribute | 89.1 | 91.4 | 78.6 | 86.4 | 73.5 | 83.8 | 18.8 | 58.7 |
| | CiP | 88.0 | 91.1 | 78.3 | 85.8 | 73.5 | 82.4 | 19.3 | 58.4 |
| LLM+Diffusion | SSD-LLM (Ours) | 90.5 | 93.0 | 80.4 | 88.0 | 79.1 | 84.8 | 22.1 | 62.0 |
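For readers unfamiliar with the two metrics reported in the table above, the sketch below shows one common way to compute them from per-sample results. It assumes average accuracy is the overall sample-level accuracy and worst-group accuracy is the minimum accuracy over subpopulations; the exact evaluation protocol used for the benchmark is not specified here, and the function name and toy data are hypothetical.

```python
from collections import defaultdict


def average_and_worst_group_accuracy(groups, correct):
    """Return (average accuracy, worst-group accuracy, per-group accuracies).

    groups: group (subpopulation) id for each sample.
    correct: truthy if the corresponding prediction was right.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for group, is_correct in zip(groups, correct):
        totals[group] += 1
        hits[group] += int(bool(is_correct))
    per_group = {g: hits[g] / totals[g] for g in totals}
    average = sum(hits.values()) / sum(totals.values())
    return average, min(per_group.values()), per_group


# Toy example: a small minority group drags down the worst-group accuracy
# while the average stays high.
groups = ["minority", "minority", "majority", "majority", "majority", "majority"]
correct = [1, 0, 1, 1, 1, 1]
average, worst, per_group = average_and_worst_group_accuracy(groups, correct)
print(round(average, 3), worst)
# 0.833 0.5
```

The gap between the two numbers is why worst-group accuracy is the more informative metric under subpopulation shift.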

| Method \ Category | Boat | Bird | Car | Cat | Dog | Truck | Topic Error Rate |
|---|---|---|---|---|---|---|---|
| ImageNet | 4.33 | 0.81 | 11.33 | 11.14 | 0.69 | 11.71 | 6.72 |
| General Prompt | 47.82 | 12.11 | 43.55 | 14.22 | 10.19 | 12.65 | 23.42 |
| GPT-Suggest | 57.55 | 12.87 | 43.59 | 12.71 | 16.34 | 28.12 | 28.53 |
| Domino(Bert) | 76.26 | 42.26 | 54.21 | 33.89 | 24.50 | 29.54 | 43.44 |
| B2T | 77.62 | 30.04 | 58.17 | 36.36 | 19.80 | 33.47 | 42.58 |
| SSD-LLM (Ours) | 79.31 | 45.67 | 60.34 | 32.97 | 26.48 | 39.57 | 47.39 |

For future work, we suggest the following promising directions:

The four-layer subpopulation structure can be expanded to more suitable structures according to specific task requirements.

SSD-LLM can have more applications in various computer vision and multimodality tasks, e.g. object detection and VQA.

The subpopulation structure obtained from SSD-LLM holds the potential to guide dataset construction with better fairness [10] or to further support the construction of unbiased datasets [11].

The core procedure of SSD-LLM, using an LLM to conduct group-level summarization, can be extended to more types of content, including patterns of model hallucinations.

[1] Sehyun Kwon, et al. "Image Clustering Conditioned on Text Criteria." ICLR 2024.

[2] Yuzhe Yang, et al. "Change is Hard: A Closer Look at Subpopulation Shift." ICML 2023.

[3] Liang, Weixin, and James Zou. "Metashift: A dataset of datasets for evaluating contextual distribution shifts and training conflicts." ICLR 2022.

[4] Zhang, Xingxuan, et al. "Nico++: Towards better benchmarking for domain generalization." CVPR 2023.

[5] Sabri Eyuboglu, et al. "Domino: Discovering Systematic Errors with Cross-Modal Embeddings." ICLR 2022.

[6] Irena Gao, Gabriel Ilharco, et al. "Adaptive Testing of Computer Vision Models." ICCV 2023.

[7] Johnson N, et al. "Where does my model underperform? a human evaluation of slice discovery algorithms." AAAI 2023.

[8] Dunlap L, et al. "Diversify your vision datasets with automatic diffusion-based augmentation." NeurIPS 2023.

[9] Chen M, et al. "HiBug: on human-interpretable model debug." NeurIPS 2024.

[10] Wang A, et al. "REVISE: A tool for measuring and mitigating bias in visual datasets." IJCV 2022.

[11] Liu Z, He K. "A Decade's Battle on Dataset Bias: Are We There Yet?" arXiv 2024.

@inproceedings{luo2025llm,

title={LLM as dataset analyst: Subpopulation structure discovery with large language model},

author={Luo, Yulin and An, Ruichuan and Zou, Bocheng and Tang, Yiming and Liu, Jiaming and Zhang, Shanghang},

booktitle={European Conference on Computer Vision},

pages={235--252},

year={2025},

organization={Springer}

}
